A Ticking Time Bomb?

January 21, 2022 | Expert Insights

Artificial intelligence is one of the most disruptive technologies of the modern era. With its growing military applications, it heralds significant changes in the nature of warfare.

Background

During the Cold War, the Americans and the Soviets were extremely distrustful of each other. Even so, the two sides were able to work together and conclude the Anti-Ballistic Missile Treaty, under which both agreed to limit their anti-ballistic missile systems. The logic behind the treaty was that the best path to peace in a nuclear world was mutually assured destruction.

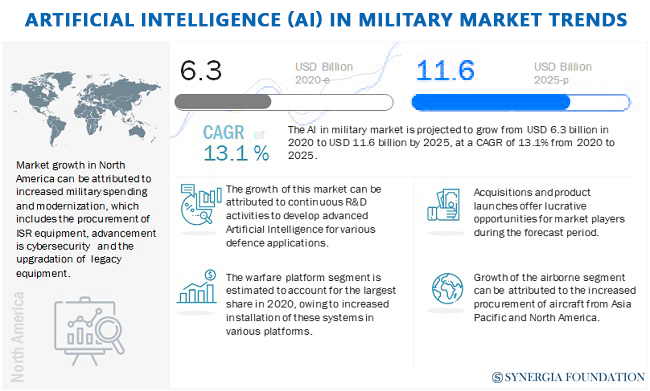

Today, machine learning and artificial intelligence threaten to upend this balance. A report released in November 2020 by the U.S. National Security Commission on Artificial Intelligence explains that AI could reshape the global balance to the same degree that electricity changed warfare and society in the 19th century. Amid an intensifying rivalry between the U.S. and China, Beijing has submitted a proposal to the United Nations, asking for the use of AI in warfare to be globally regulated.

Analysis

In the medical context, AI can detect tumours that radiologists would miss. In the military context, it could find 'needles in a haystack', such as the stealthiest submarines and helicopters, undermining the concept of nuclear deterrence.

Recognizing this potential, China has been pumping billions of dollars into AI and hopes to be the leader in this space by 2030. Experts agree that AI will be the foundation of the PLA's mission to create a world-class military that directly rivals that of the United States. The PLA air force already uses AI-simulated opponents during pilot combat training. In the future, such AI will also be used to assist pilots in decision-making.

Even as they increase their investments in AI, Chinese officials are aware of its inherent risks. As a result, they have initiated discussions with U.S. and European governments about these threats. In a document presented to the United Nations, Beijing remarked: "In terms of strategic security, countries, especially major countries, need to develop and apply AI technology in the military field in a prudent and responsible manner, refrain from seeking absolute military advantage, and prevent the deepening of strategic miscalculation, undermining of strategic mutual trust, escalation of conflicts, and damaging global strategic balance and stability."

It is worth remembering that autonomous weaponry is said to be the third revolution in warfare (the first being gunpowder and the second being nuclear weapons). We have already moved from land mines to guided missiles. With the introduction of autonomous weapons, people can be targeted and killed without any human involvement. Another consequence of AI and robotics is that creating weapons has become cheaper and significantly more accessible.

Naturally, this has raised significant concerns about the ethics of using AI in warfare. As UN Secretary-General António Guterres said, "the prospect of machines with the discretion and power to take human life is morally repugnant." Unlike humans, AI does not have the ability to reason across domains, and this limitation means that an autonomous weapons system will not fully understand the consequences of its actions.

If the proliferation of AI-based weapons is allowed to continue unabated, it will only be a race to oblivion. A significant challenge that was not present in the previous arms race is verification. In the past, inspectors could readily verify whether countries were violating agreements by building nuclear warheads. With AI, monitoring the level of advancement in algorithms will be significantly more cumbersome.

Several solutions have been proposed to mitigate the adverse impact of AI in warfare. The first is to ensure there is always human guidance: the final decisions should be made by a human, even if this means forgoing certain benefits of a fully automated system, such as speed. The second proposal is a total ban on the development of AI beyond a specific limit. The Campaign to Stop Killer Robots is a cause that has been promoted by eminent people such as Elon Musk, Stephen Hawking and other experts in the field of AI. The third alternative is to regulate the use of military AI instead of enacting a blanket prohibition. Of course, all three solutions come with their own limitations in relation to enforcement and verification. However, sustained negotiations around these areas will be critical to a safer and more secure world.

Counterpoint

Many argue that automated weapons can save the lives of countless soldiers, who currently put their lives on the line to fight wars. It is also argued that AI's precision in warfare will actually reduce the harm inflicted on civilian lives and property as attacks become more targeted and accurate.

Assessment

- The U.S. and China are currently engaged in a fierce race for AI supremacy. However, both sides seem to have an informed understanding of the risks involved, prompting them to explore modalities for cooperation.

- Although AI systems can work well in controlled domains, they may be prone to errors under adversarial conditions. This can translate to devastating losses on the battlefield, which countries can ill afford. As a result, international regulation of the military uses of AI is in the larger interest of all stakeholders.
