Mercenaries of Disinformation

August 31, 2021 | Expert Insights

Amidst the havoc wreaked by COVID-19, the world has been grappling with a second pandemic in the form of online disinformation. Most recently, a report by Max Fisher in the New York Times revealed alarming details about a virtual ‘disinformation-for-hire’ economy: a burgeoning industry of ‘black public relations (PR) firms’ that cater to political parties, governments and private organisations, deploying fake accounts and false narratives to manipulate online opinion in exchange for money.

Background

Influence operations, which lie at the intersection of information fabrication and discourse management, are hardly a novel concept. As early as 34 BCE, Octavian, who would later become Caesar Augustus, the first Roman emperor, organised a sustained propaganda campaign against Mark Antony to smear his reputation before the Roman public.

Centuries later, the invention of the Gutenberg printing press drastically amplified the breadth and reach of such disinformation tactics. In fact, the evolution of influence operations went hand in hand with the advent of new communication technologies like the telephone, radio, and television. State-sponsored propaganda, which sought to manage public opinion, was increasingly deployed during the twentieth century. For instance, during World War I, U.S. President Woodrow Wilson created a ‘Committee on Public Information’ that launched a sweeping propaganda blitz to justify America’s entry into the war.

However, the privatisation of disinformation became most discernible during the Cold War. Covert operatives on either side of the Iron Curtain used front organisations to peddle their political narratives. While some of these were run directly by intelligence organisations, others were operated by professional contractors. With the advent of the internet, this 'professionalisation of deception' has now taken over the virtual world.

Analysis

In recent times, online disinformation operations attributed to nation-states like Russia or China have caught the attention of financially motivated individuals and groups. According to media reports, in the run-up to the 2016 U.S. presidential election, private actors raked in massive profits by pushing political propaganda through fake news sites and online troll farms.

In fact, researchers at Oxford University have identified around 48 countries where private firms work with governments and political parties to fuel disinformation campaigns. It is estimated that nearly $60 million has been spent on such contracts with private organisations. Owing to the surplus of cheap digital labour in the Asia–Pacific, such shadow industries are believed to be concentrated in this region. However, social media companies like Facebook and Twitter have unearthed networks of marketing firms in other countries, such as the UAE, Nigeria, Saudi Arabia and Egypt, that target public debates in the Middle East and Africa.

Irrespective of their geographical location, most ‘black PR’ groups operate as independent consultants or entities offering social media marketing services. With demonstrable competence across an array of digital platforms, they sow discord and push viral conspiracies, exploiting filter bubbles and existing user bias. Moreover, the growth of artificial intelligence and automated bots has rendered these disinformation tactics more sophisticated, as traffic can be generated far faster than human operators could manage alone.

Some of the commonly employed techniques, as reported in the Financial Times, include the buying up of comment space, narrative laundering and the boosting of visibility by artificially linking keywords to search terms. In doing so, PR firms can also obscure the involvement of ultimate beneficiaries who have hired their services in the first place.

Apart from government or government-affiliated actors, these services are used by private organisations in highly competitive corporate environments. To inflict financial and reputational damage on each other, rival firms may conclude contracts with trolls, profiteers or foreign actors on the dark web. For example, an investigation by the cybersecurity company Recorded Future revealed that there are public relations firms in Russia that will bolster the image or smear the reputation of private companies in return for a small fee. Besides deploying fake accounts on social media networks, they can plant news articles in legitimate English-language media outlets.

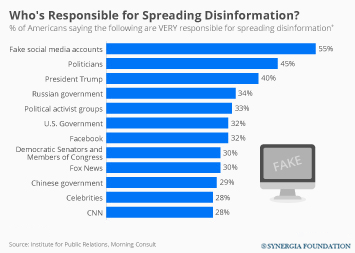

Given this reality, the quality of civic discourse is under severe threat, as public opinion can be coloured by fake news and unsubstantiated claims. A manipulated information environment does not bode well for democratic countries, as citizens are deprived of the right to exercise independent judgement about political choices and decisions. Owing to the discursive power of digital platforms, therefore, the onus is on social media companies and intermediaries to proactively tackle disinformation by enforcing content moderation policies and shutting down fake accounts.

Counterpoint

While social media companies may have the primary duty to take action against online manipulation, governments cannot shirk their own responsibilities. By cooperating with stakeholders in the tech industry as well as civil society, state actors can incentivise an ecosystem for ethical content creation. Public investment in disinformation research is another way to tackle this pervasive problem.

However, the securitisation of disinformation should not operate as a pretext for enabling censorship and surveillance.

Assessment

- Although private organisations hire black PR firms, disinformation services are most often exploited by nefarious political actors. As a result, legal frameworks should incorporate transparency norms that subject political funding to public scrutiny, especially in relation to advertising and PR exercises.

- Digital platforms, for their part, should constitute oversight committees that manage account bans, supervise content moderation policies and participate in public reporting. To identify whether social media campaigns are driven organically or by malicious actors, they also need to invest in sophisticated attribution technologies.
