Facebook newsfeed changes

January 22, 2018 | Expert Insights

Facebook is taking another step to try to make itself more socially beneficial, saying it will boost news sources that its users rate as trustworthy in surveys.

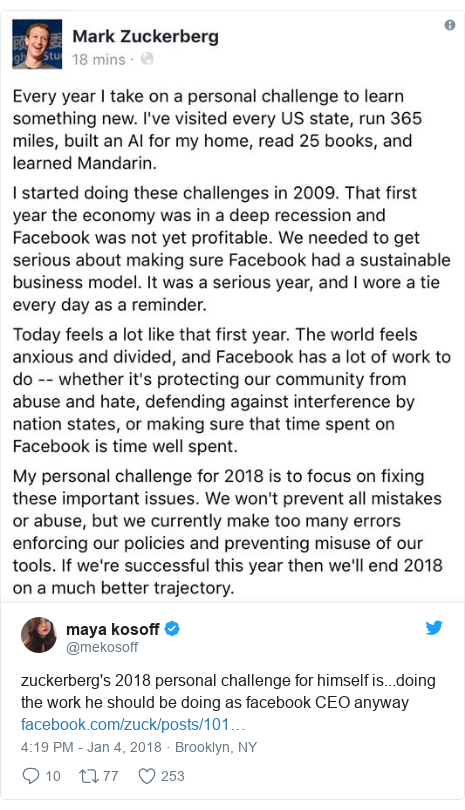

Social media experts have expressed doubts over the effectiveness of Facebook's plan to combat fake news by surveying users on what news sources they find trustworthy. In a post on his personal page on Friday evening, Facebook's CEO and founder Mark Zuckerberg said he wanted to make sure news consumed on the platform was from "high quality and trusted sources".

Background

In May 2017, it was reported that Facebook had been a key influence on the outcome of the 2016 US presidential election and the Brexit vote, according to those who ran the campaigns.

But critics say it is a largely unregulated form of campaigning.

Those in charge of the digital campaigns for Donald Trump's Republican Party and the political consultant behind Leave EU's referendum strategy are clear the social network was decisive in both wins.

Political strategist Gerry Gunster, from Leave EU, told BBC Panorama that Facebook was a game changer for convincing voters to back Brexit.

"You can say to Facebook, 'I would like to make sure that I can micro-target that fisherman, in certain parts of the UK, so that they are specifically hearing that if you vote to leave you will be able to change the way that the regulations are set for the fishing industry'."

Facebook CEO Mark Zuckerberg has vowed to "fix" Facebook, in what he described as his personal challenge for 2018.

In a post on his page on the social network, he said the company was making too many errors enforcing policies and preventing misuse of its tools. Mr Zuckerberg has famously set himself challenges every year since 2009. Facebook launched in 2004.

Social media firms have come under fire for allowing so-called fake news to spread ahead of US and other elections.

Analysis

Facebook said that it would ask its users to tell it which news sources they read and trust to help it decide which ones should be featured more prominently. These responses will help “shift the balance of news you see towards sources that are determined to be trusted by the community,” CEO Mark Zuckerberg explained in a Facebook post.

Meanwhile, Twitter said in a blog post that it would email nearly 678,000 users who may have inadvertently interacted with now-suspended accounts believed to have been linked to a Russian propaganda outfit called the Internet Research Agency (IRA).

"There's too much sensationalism, misinformation and polarization in the world today," wrote Zuckerberg.

"Social media enables people to spread information faster than ever before, and if we don't specifically tackle these problems, then we end up amplifying them."

However, social media analysts had reservations over the effectiveness of the plan, questioning whether this was the best way to go about tackling the issue.

Technology analyst Larry Magid told Al Jazeera's NewsGrid that the survey risked reflecting opinions formed from prejudices against, or preferences for, certain outlets rather than whether those outlets were trustworthy or accurate.

"Simply because something is well liked by a percentage of the public, doesn't mean it's reliable," he said.

"There are people who love news sites that are objectively untrue - that doesn't require an opinion, that's something you can establish by fact," added Magid.

Matt Navarra, the Head of Social Media at The Next Web, tweeted: "Facebook announces it will start asking users to decide which publishers are trustworthy in order to filter out news content. The same Facebook users who constantly fail to spot Fake News and share it widely."

Not all analysts, however, were critical of the move.

Renee DiResta, policy lead at Data for Democracy, said Facebook's decision was "great news and a long time coming".

"Google has been ranking for quality for a long time, it's a bit baffling how long it took for social networks to get there," she wrote on Twitter.

Assessment

Our assessment is that concerns about so-called fake news have grown in tandem with the spread of social media. As the 2016 US presidential election showed, millions look to Facebook for news stories. Thus, increasing accountability will be a move in the right direction for Facebook. However, it is not yet clear how effective this system will be in identifying trustworthy news sources.
